Why AI Tools Should Be Taught as a Basic Skill at Universities — Not Treated as Cheating

Opinion piece · Student life

For many university students today, the word “AI” has quietly become synonymous with suspicion. Course syllabi warn against its use, lecturers issue vague prohibitions, and students are left wondering whether opening an AI tool already counts as cheating. Treating AI tools as academic misconduct, however, is not only unrealistic; it is academically irresponsible. Universities should be teaching students how to use AI critically and ethically, rather than pretending it does not belong in higher education.

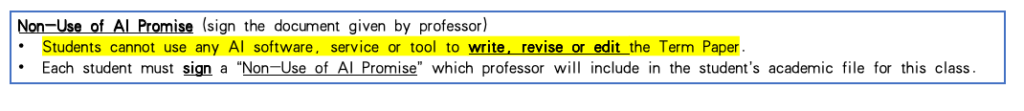

One of the university guidelines on AI use at the author’s home institution (Source: Selina’s Photos)

The reality outside the classroom has already changed. In journalism, business, policy analysis, and even academic research, AI-assisted tools are becoming part of everyday workflows. Employers increasingly expect graduates to know how to work with digital tools efficiently, evaluate automated outputs, and make informed decisions based on them. Universities claim to prepare students for the professional world, yet banning AI does the opposite. It creates a growing gap between academic training and real-world expectations, leaving graduates technically underprepared the moment they step outside campus.

Moreover, banning AI does not protect academic integrity — it undermines it. When clear guidance is missing, students respond in different ways. Some secretly use AI tools without understanding their limitations or ethical implications, while others avoid them completely out of fear, even when such tools could support learning. This results in an uneven and opaque system where honesty is punished and silence is rewarded. Academic integrity should be about transparency and responsibility, not fear and confusion.

In my own experience, using AI does not automatically replace thinking; it can enhance it when used correctly. AI tools can help generate ideas, clarify structure, or improve language, especially for students working in a second language. They do not write arguments on their own, nor do they replace critical judgment. When I use AI as a support tool, I am still responsible for evaluating its suggestions, correcting its mistakes, and shaping my own voice. Without guidance, however, students are left to experiment blindly, increasing the risk of misuse rather than reducing it.

Universities already teach students how to use calculators, academic databases, and citation software responsibly. AI should be treated no differently. Instead of blanket bans, universities could integrate AI literacy into their curricula: explaining what AI can and cannot do, setting clear boundaries for acceptable use, and encouraging reflection on ethical concerns. Teaching responsible use empowers students to make informed choices, rather than forcing them into secrecy.

Ultimately, universities face a choice. They can cling to outdated rules that ignore technological reality, or they can adapt and lead. Ignoring AI will not make it disappear, and banning it will not make students more honest. Teaching AI as a basic academic skill, however, can make higher education more relevant, fair, and future-oriented. Responsible use is not a threat to education — it is the future of it.
